Edge AI: Deploying Machine Learning Models on Edge Devices

- Author: Adil ABBADI

Introduction

The proliferation of IoT devices has driven rapid growth in data generation, and much of that data needs to be processed and analyzed in real time. Edge AI addresses this by running machine learning models directly on edge devices such as smart cameras, robots, and autonomous vehicles, instead of sending every input to the cloud for inference. This approach reduces latency, lowers bandwidth use, and keeps more data on the device, which makes it relevant across many industries. In this blog post, we cover the benefits and challenges of Edge AI and the main strategies for deploying machine learning models on edge devices.

- Benefits of Edge AI

- Challenges of Edge AI

- Deployment Strategies for Machine Learning Models on Edge Devices

- Practical Example of Edge AI Deployment

- Conclusion

- Ready to Master Edge AI?

Benefits of Edge AI

Edge AI offers several advantages over traditional cloud-based AI approaches:

Real-time Processing

Edge devices can process data in real time, enabling immediate response to changing conditions. This is particularly critical in applications like autonomous vehicles, where latency can be a matter of life and death.

Reduced Latency

By processing data close to its source, Edge AI avoids the network round trip to a remote server. This makes it suitable for applications that require near-instant feedback, such as smart home automation or industrial control systems.

Improved Security

Edge AI reduces the risk of data breaches by minimizing the amount of data transmitted to the cloud or centralized servers. This is particularly important in industries like healthcare, finance, and defense, where data security is paramount.

Increased Efficiency

Edge devices can operate independently, reducing the need for constant communication with the cloud or centralized servers, resulting in lower bandwidth usage and improved overall efficiency.

Challenges of Edge AI

While Edge AI offers numerous benefits, it also presents several challenges:

Limited Computational Resources

Edge devices have limited processing power, memory, and storage capacity, making it essential to optimize machine learning models for deployment on these devices.
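A rough way to reason about the memory constraint is to estimate how much storage the weights alone require. The sketch below is illustrative only: the 5-million-parameter model and the 10 MB budget are hypothetical numbers, not measurements from a real device, and activations and runtime overhead are ignored.

```python
def model_size_mb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bits_per_weight / 8 / 1e6


def fits_in_budget(num_params: int, bits_per_weight: int, budget_mb: float) -> bool:
    """True if the weights alone fit inside the given memory budget."""
    return model_size_mb(num_params, bits_per_weight) <= budget_mb


# Hypothetical model and budget, chosen only to illustrate the arithmetic.
params = 5_000_000
budget_mb = 10.0

size_fp32 = model_size_mb(params, 32)  # 32-bit floating-point weights
size_int8 = model_size_mb(params, 8)   # 8-bit integer weights

print(f"float32: {size_fp32} MB, fits: {fits_in_budget(params, 32, budget_mb)}")
print(f"int8:    {size_int8} MB, fits: {fits_in_budget(params, 8, budget_mb)}")
```

Under these assumptions the same model goes from exceeding the budget to fitting within it once the weights are stored in 8 bits, which is why the optimization strategies discussed later matter.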

Data Quality and Quantity

Edge devices generate vast amounts of data, which can be noisy, incomplete, or inconsistent. Ensuring data quality and relevance is crucial for accurate model performance.

Model Deployment and Management

Deploying and managing machine learning models on edge devices can be complex, requiring specialized tools and expertise.

Security and Updates

Edge devices are vulnerable to security breaches, so they need timely updates and patches to prevent exploitation.
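One small piece of a safe update process is checking that a downloaded model file is the one you intended to ship before loading it. The following is a minimal sketch, assuming the expected SHA-256 digest reaches the device through a trusted channel. The function names `sha256_of_file` and `is_trusted_model` are hypothetical helpers, and a production system would more likely rely on cryptographic signatures than on a bare checksum.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large models do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_trusted_model(path: Path, expected_sha256: str) -> bool:
    """Compare the file's digest with the expected one in constant time."""
    return hmac.compare_digest(sha256_of_file(path), expected_sha256.lower())


# Usage (hypothetical file name and digest source):
# if not is_trusted_model(Path("model.tflite"), expected_digest):
#     raise RuntimeError("Model file failed integrity check; refusing to load")
```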

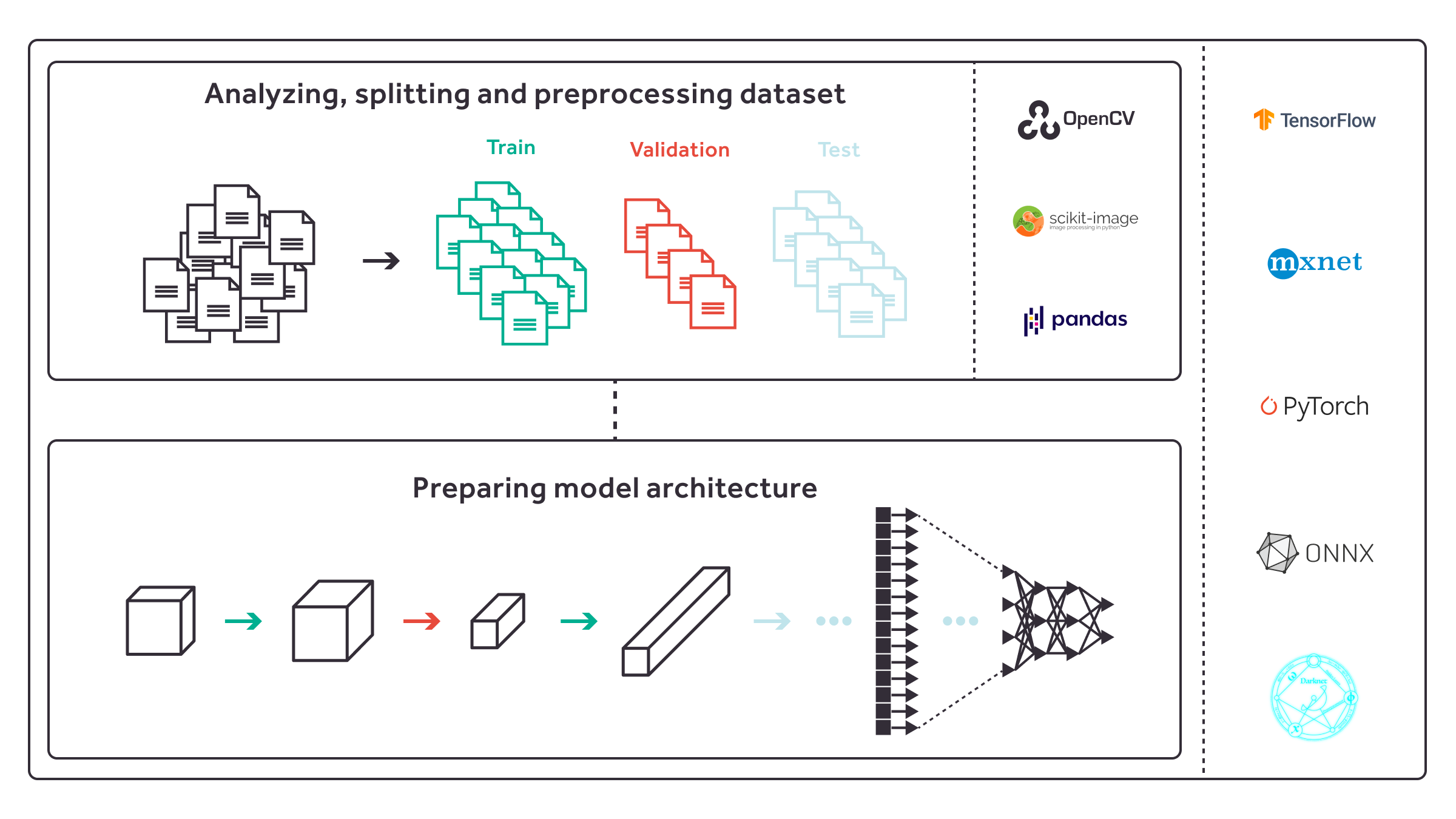

Deployment Strategies for Machine Learning Models on Edge Devices

To deploy machine learning models on edge devices, several strategies can be employed:

Model Pruning and Quantization

Model pruning reduces the complexity of a machine learning model by removing redundant or low-importance weights and connections. Quantization lowers the numeric precision of the model's weights (and often its activations), typically from 32-bit floating-point numbers to 8-bit integers, which reduces memory usage and computational requirements at the cost of a small loss in accuracy.
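To make the two ideas concrete, here is a minimal pure-Python sketch of magnitude-based pruning and affine 8-bit quantization applied to a flat list of weights. It is a simplified illustration, not how any particular framework implements these steps. The helper names and the example weights are made up for this post.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    pruned, dropped = [], 0
    for w in weights:
        if abs(w) <= threshold and dropped < k:
            pruned.append(0.0)
            dropped += 1
        else:
            pruned.append(w)
    return pruned


def quantize_int8(weights):
    """Affine quantization to int8, so that w is roughly scale * (q - zero_point)."""
    lo = min(min(weights), 0.0)  # the representable range must include 0
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float weights from their int8 representation."""
    return [scale * (v - zero_point) for v in q]


weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02]  # made-up example weights
pruned = prune_by_magnitude(weights, sparsity=0.5)
q, scale, zero_point = quantize_int8(pruned)
restored = dequantize(q, scale, zero_point)
print(pruned)
print(q, scale, zero_point)
```

In TensorFlow Lite, post-training quantization can be requested by setting `converter.optimizations = [tf.lite.Optimize.DEFAULT]` on the converter before calling `convert()`. The accuracy impact depends on the model, so it is worth re-evaluating on a validation set afterwards.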

Knowledge Distillation

Knowledge distillation involves training a smaller model (the student) to mimic the behavior of a larger, pre-trained model (the teacher). This approach enables the student model to learn the essential features and patterns from the teacher model, making it suitable for deployment on edge devices.
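A common way to express "mimic the teacher" is a loss that compares the student's and teacher's output distributions after softening both with a temperature. The sketch below shows that loss for a single example in plain Python. The temperature value and the logits are arbitrary illustrations, and in practice this term is usually combined with the ordinary loss on the true labels.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T squared."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


teacher = [4.0, 1.0, 0.2]       # arbitrary example logits
close_student = [3.5, 1.2, 0.1]
far_student = [0.1, 3.0, 2.0]

print(distillation_loss(close_student, teacher))
print(distillation_loss(far_student, teacher))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, which is what drives the student toward the teacher's behavior during training.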

Edge-Aware Model Design

Edge-aware model design involves designing machine learning models from scratch, taking into account the limitations and constraints of edge devices. This approach ensures that the model is optimized for deployment on edge devices, reducing the need for pruning or quantization.
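One widely used building block in edge-oriented architectures is the depthwise separable convolution, which replaces a standard convolution with a per-channel spatial filter followed by a 1x1 pointwise convolution. The post does not name a specific architecture, so treat this as one illustrative example. The layer sizes below are arbitrary and bias terms are ignored.

```python
def conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a standard 2D convolution (no bias)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise convolution plus a 1x1 pointwise convolution (no bias)."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Arbitrary example layer: 3x3 kernel, 64 input channels, 128 output channels.
standard = conv_params(3, 64, 128)
separable = depthwise_separable_params(3, 64, 128)

print(standard, separable, round(standard / separable, 2))
```

For this example layer the separable version needs 8,768 weights instead of 73,728, roughly an eightfold reduction, before any pruning or quantization is applied.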

Containerization and Orchestration

Containerization and orchestration tools, such as Docker and Kubernetes, enable efficient deployment and management of machine learning models on edge devices that are capable of running a container runtime. These tools provide a standardized environment for model deployment, ensuring consistency and reproducibility across different edge devices.

Practical Example of Edge AI Deployment

Here's a practical example of deploying a machine learning model on an edge device using TensorFlow Lite and Raspberry Pi:

# --- Step 1: on the development machine, convert the model ---
import tensorflow as tf

# Load the pre-trained Keras model
model = tf.keras.models.load_model('model.h5')

# Convert the model to TensorFlow Lite format
converter = tf.lite.TFLiteConverter.from_keras_model(model)
tflite_model = converter.convert()

# Save the TensorFlow Lite model to a file
with open('model.tflite', 'wb') as f:
    f.write(tflite_model)

# --- Step 2: on the Raspberry Pi, run inference with the TensorFlow Lite runtime ---
import numpy as np
import tflite_runtime.interpreter as tflite

interpreter = tflite.Interpreter(model_path='model.tflite')
interpreter.allocate_tensors()

# Get input and output tensor metadata
input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()

# Set input data (assumes the model takes a 1x224x224x3 float32 input;
# np.random.rand returns float64, so cast before calling set_tensor)
input_data = np.random.rand(1, 224, 224, 3).astype(np.float32)
interpreter.set_tensor(input_details[0]['index'], input_data)

# Run the inference
interpreter.invoke()

# Get the output
output_data = interpreter.get_tensor(output_details[0]['index'])
print(output_data)
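Since latency is one of the main reasons to run a model at the edge, it is worth measuring it on the target device rather than assuming it. The helper below is a small, hedged sketch: `benchmark` is a hypothetical function written for this post, and the stand-in workload only exists so the snippet runs anywhere. On the Raspberry Pi you would pass `interpreter.invoke` from the example above instead.

```python
import statistics
import time


def benchmark(run_once, warmup=5, runs=50):
    """Return (median_ms, p95_ms) for a zero-argument callable."""
    for _ in range(warmup):  # warm-up runs are not timed
        run_once()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95 = samples[min(len(samples) - 1, int(0.95 * len(samples)))]
    return statistics.median(samples), p95


# Stand-in workload so the sketch is runnable without a model.
median_ms, p95_ms = benchmark(lambda: sum(i * i for i in range(10_000)))
print(f"median: {median_ms:.3f} ms, p95: {p95_ms:.3f} ms")

# On the device (assuming `interpreter` is set up as in the example above):
# median_ms, p95_ms = benchmark(interpreter.invoke)
```

Reporting a high percentile alongside the median helps reveal occasional slow runs that an average would hide.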

Conclusion

Edge AI moves inference onto the device itself, which enables real-time processing, reduced latency, and improved security. By understanding its benefits and challenges and applying strategies such as model pruning, quantization, knowledge distillation, and edge-aware model design, we can deploy machine learning models on edge devices efficiently. The tooling in this area changes quickly, so it is worth keeping up with new developments in the field.

Ready to Master Edge AI?

Start exploring the world of Edge AI today and discover the limitless possibilities of deploying machine learning models on edge devices.