Kafka Streaming Data Processing and Integration: Unlocking Real-Time Insights

- Author: Adil ABBADI

Introduction

At the heart of modern data infrastructure lies the ability to process and analyze data in real time. Apache Kafka, a distributed streaming platform, has become the backbone for streaming data, enabling organizations to build robust, scalable, and fault-tolerant solutions for data processing and integration. In this article, we’ll explore how Kafka empowers streaming data workflows, examine its core processing paradigms, and discuss integration patterns that help you get the most out of your real-time data.

- Kafka Architecture and Streaming Fundamentals

- Stream Processing with Kafka Streams

- Integrating Kafka with External Systems

- Conclusion

- Start Your Streaming Journey Today

Kafka Architecture and Streaming Fundamentals

Apache Kafka is built on the publish-subscribe model and optimized for high throughput and low latency. Producers write records to named topics, and any number of consumers can read them. Each topic is split into partitions that are spread across brokers, so load can be distributed and scaled horizontally.
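To make the partitioning idea concrete, here is a minimal sketch of how a record key can be mapped to a partition so that all records with the same key land in the same partition. It is a simplified model, not Kafka's implementation: the class name, the method name, and the use of `Arrays.hashCode` are assumptions chosen for illustration, and Kafka's default partitioner uses a different hash function (murmur2).

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

public class PartitionSketch {

    // Simplified stand-in for a keyed partitioner: hash the key bytes,
    // clear the sign bit, and take the remainder by the partition count.
    // Assumption: Arrays.hashCode is used only for illustration; Kafka's
    // default partitioner uses a different hash (murmur2).
    public static int partitionFor(String key, int numPartitions) {
        int hash = Arrays.hashCode(key.getBytes(StandardCharsets.UTF_8));
        return (hash & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int partitions = 6; // hypothetical partition count
        for (String key : new String[] {"user-1", "user-2", "user-1"}) {
            System.out.println(key + " -> partition " + partitionFor(key, partitions));
        }
    }
}
```

Because the mapping depends only on the key and the partition count, both `user-1` records go to the same partition, which is what preserves per-key ordering.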

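Because Kafka stores each partition as a durable, append-only log, streaming applications can consume, process, and replay streams of data. Replicating partitions across brokers lets the cluster survive the failure of an individual broker without losing acknowledged records, provided topics are created with a replication factor greater than one and producers wait for acknowledgement from the in-sync replicas (acks=all). A minimal Java producer looks like this: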

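import java.util.Properties;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

Properties props = new Properties();
props.put("bootstrap.servers", "localhost:9092");
props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

// try-with-resources closes the producer, which waits for buffered records to be sent
try (KafkaProducer<String, String> producer = new KafkaProducer<>(props)) {
    producer.send(new ProducerRecord<>("my-topic", "key", "message payload"));
}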

Kafka can serve as both the “source of truth” (persistent log) and the backbone of stream processing operations.
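The "persistent log" idea can be sketched with a toy in-memory log. This is only a model under stated assumptions: the `PartitionLog` class and its methods are hypothetical names, and a real Kafka partition is persisted to disk and replicated by brokers. What it shows is that records keep their offsets after being read, so different consumers can resume or replay from any position.

```java
import java.util.ArrayList;
import java.util.List;

public class LogReplaySketch {

    // Toy append-only log. Reading never removes records, so any
    // consumer can start from any offset that is still in the log.
    public static final class PartitionLog {
        private final List<String> records = new ArrayList<>();

        // Appends a record and returns the offset it was written at.
        public long append(String value) {
            records.add(value);
            return records.size() - 1;
        }

        // Returns every record from the given offset to the end of the log.
        public List<String> readFrom(long offset) {
            return new ArrayList<>(records.subList((int) offset, records.size()));
        }
    }

    public static void main(String[] args) {
        PartitionLog log = new PartitionLog();
        log.append("order-created");
        log.append("order-paid");
        log.append("order-shipped");

        // A consumer that has already processed offsets 0 and 1 resumes at 2.
        System.out.println(log.readFrom(2)); // [order-shipped]
        // A new consumer, or one rebuilding its state, replays from offset 0.
        System.out.println(log.readFrom(0)); // [order-created, order-paid, order-shipped]
    }
}
```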

Stream Processing with Kafka Streams

Kafka Streams is a lightweight Java library for building real-time applications and microservices that read from and write to Kafka topics. Unlike stream processors that run on a separate cluster, it runs inside your own application, so processing logic is ordinary Java code that you build, test, and deploy like any other service.

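Key features include event-time processing, windowing, joins, and exactly-once semantics. The topology below is deliberately simpler: it reads from one topic, uppercases each value, and writes the result to another topic: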

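import java.util.Properties;
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.*;
import org.apache.kafka.streams.kstream.KStream;

// Streams needs its own configuration; the producer settings shown earlier are not sufficient
Properties props = new Properties();
props.put(StreamsConfig.APPLICATION_ID_CONFIG, "uppercase-app"); // example application ID
props.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
props.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
props.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

StreamsBuilder builder = new StreamsBuilder();
KStream<String, String> source = builder.stream("input-topic");
KStream<String, String> uppercased = source.mapValues(value -> value.toUpperCase());
uppercased.to("output-topic");

KafkaStreams streams = new KafkaStreams(builder.build(), props);
streams.start();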

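Kafka Streams applications scale by running more instances with the same application ID, which divide the input partitions among themselves. State is kept in local state stores that are backed by changelog topics in Kafka, so an instance can rebuild its state after a crash.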
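Windowing, one of the features listed above, is easier to reason about with a small model. The sketch below assigns events to fixed-size, non-overlapping (tumbling) windows by event time and counts events per window. It uses only the Java standard library, not the Kafka Streams API; the class and method names are hypothetical, and it assumes non-negative millisecond timestamps and windows aligned to the epoch.

```java
import java.util.Map;
import java.util.TreeMap;

public class TumblingWindowSketch {

    // Start of the fixed-size, non-overlapping window containing the timestamp.
    // The window covers [start, start + windowSizeMs).
    public static long windowStart(long timestampMs, long windowSizeMs) {
        return timestampMs - (timestampMs % windowSizeMs);
    }

    // Counts events per window, keyed by window start time.
    public static Map<Long, Integer> countPerWindow(long[] eventTimesMs, long windowSizeMs) {
        Map<Long, Integer> counts = new TreeMap<>();
        for (long t : eventTimesMs) {
            counts.merge(windowStart(t, windowSizeMs), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        long[] eventTimesMs = {1_000, 4_999, 5_000, 12_500}; // hypothetical event timestamps
        System.out.println(countPerWindow(eventTimesMs, 5_000)); // {0=2, 5000=1, 10000=1}
    }
}
```

Because the window is derived from the event's own timestamp rather than its arrival time, an event that arrives late is still counted in the window it belongs to.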

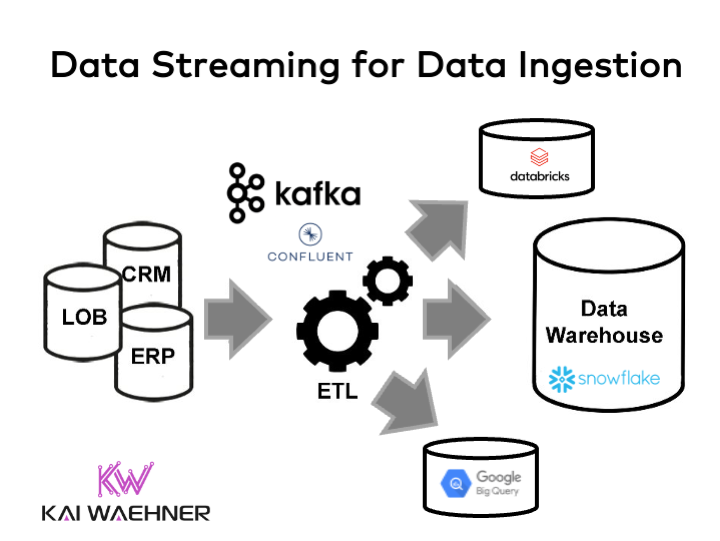

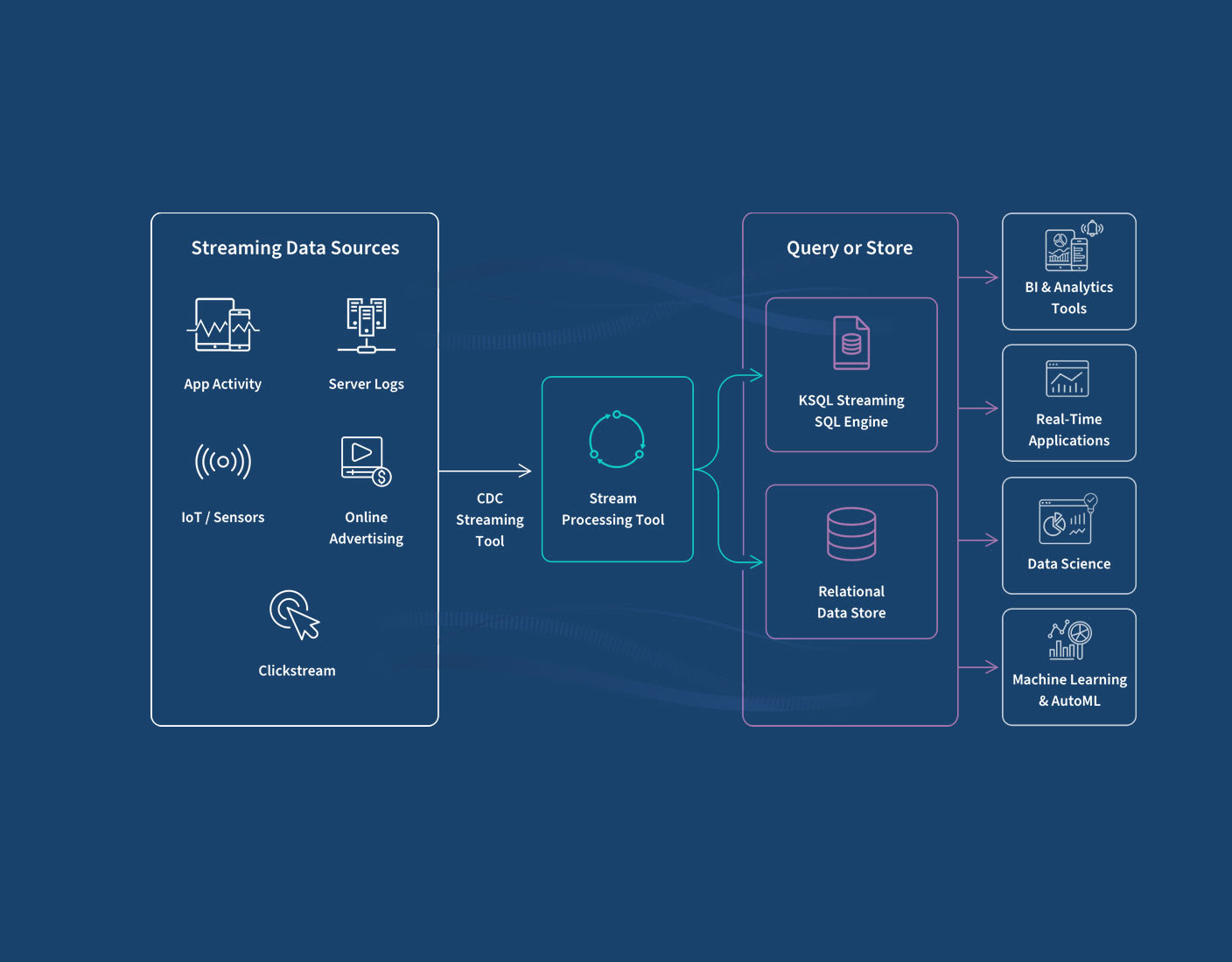

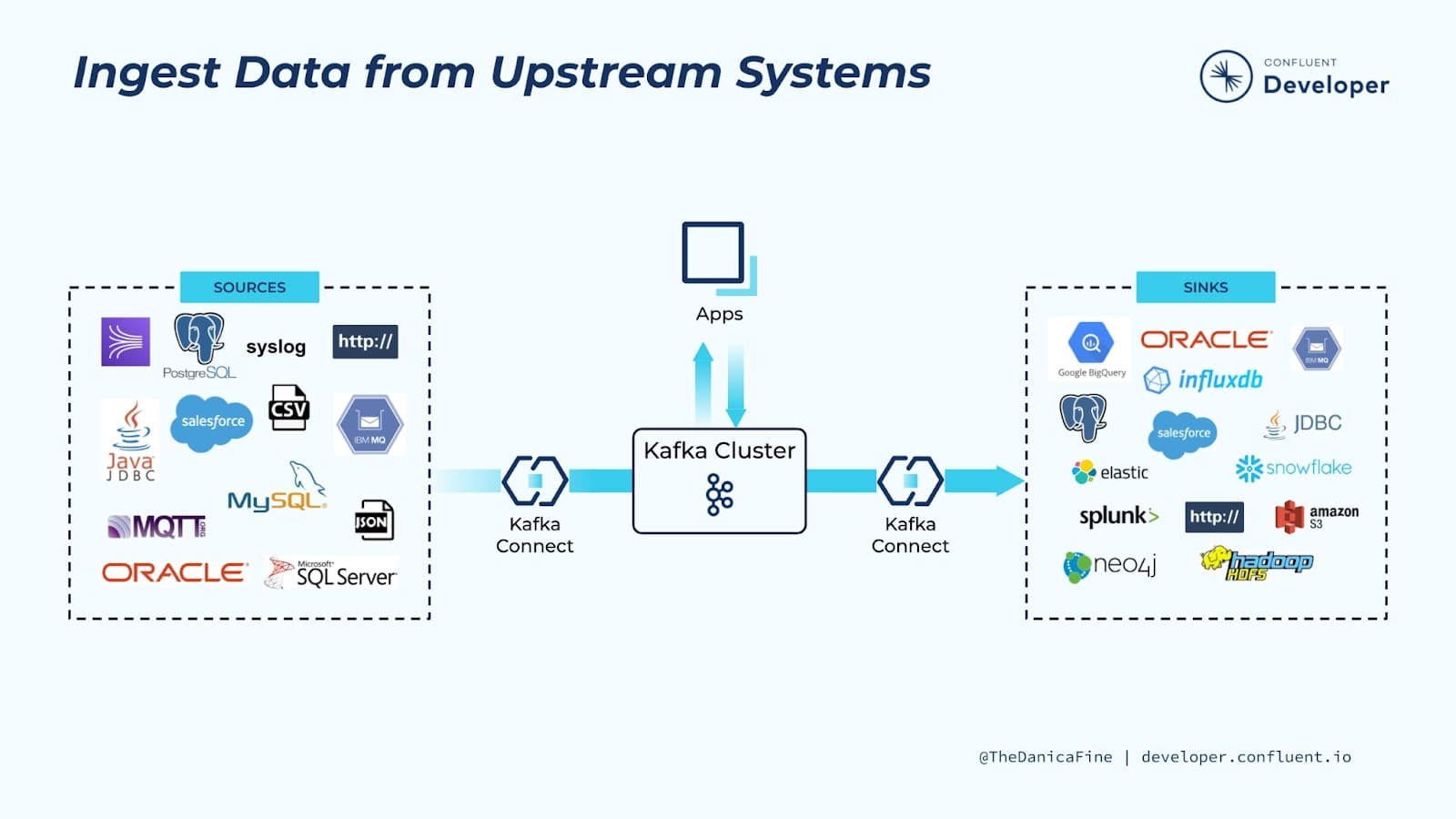

Integrating Kafka with External Systems

For a streaming platform to be truly valuable, it must integrate with legacy systems, databases, analytics services, and cloud platforms. Kafka Connect, a framework for scalable and fault-tolerant data integration, provides this through prebuilt source and sink connectors that cover hundreds of systems.

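With Kafka Connect, moving data in and out of Kafka becomes declarative: you describe a connector as configuration rather than writing custom code. For example, a JDBC sink that writes a topic to PostgreSQL: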

{
  "name": "jdbc-sink-connector",
  "config": {
    "connector.class": "io.confluent.connect.jdbc.JdbcSinkConnector",
    "tasks.max": "1",
    "topics": "output-topic",
    "connection.url": "jdbc:postgresql://localhost:5432/mydb",
    "auto.create": "true"
  }
}
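A configuration like the one above is typically registered by sending it to a Connect worker's REST API with a `POST` to `/connectors`. The sketch below builds that request with the JDK's `java.net.http` client (Java 11 or later). The class and method names are hypothetical, and the worker address `http://localhost:8083` is an assumption; adjust it to your deployment.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class ConnectSubmitSketch {

    // Builds a POST request that registers a connector with a Connect worker.
    public static HttpRequest buildCreateRequest(String connectBaseUrl, String connectorJson) {
        return HttpRequest.newBuilder()
                .uri(URI.create(connectBaseUrl + "/connectors"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(connectorJson))
                .build();
    }

    // Sends the request and returns the HTTP status code.
    // Call this only when a Connect worker is actually reachable.
    public static int submit(HttpRequest request) throws Exception {
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        return response.statusCode();
    }

    public static void main(String[] args) {
        // "{}" is a placeholder body; pass the connector JSON in practice.
        HttpRequest request = buildCreateRequest("http://localhost:8083", "{}");
        System.out.println(request.method() + " " + request.uri());
        // prints: POST http://localhost:8083/connectors
    }
}
```

Keeping request construction separate from sending makes the example safe to run without a worker; call `submit(request)` once one is available.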

Kafka integrations extend to Amazon Kinesis, Google BigQuery, Elasticsearch, Hadoop, and more, either through existing connectors or through custom producers and consumers.

Conclusion

Apache Kafka makes streaming data processing and integration scalable, reliable, and easy to extend. Combining Kafka’s distributed log with Kafka Streams for processing and Kafka Connect for integration unlocks real-time analytics and event-driven architectures across industries.

Start Your Streaming Journey Today

Kafka is the backbone of countless modern data architectures. Dive deeper into Kafka Streams, explore Kafka Connect’s connectors, and experiment with end-to-end streaming pipelines to harness real-time insights in your own projects!