Redis Caching Strategies: A Comprehensive Guide for Developers

Author: Adil ABBADI

Introduction

Redis is one of the most popular in-memory key-value stores, widely adopted for high-performance caching solutions. Its simplicity, speed, and versatility make it an indispensable tool for developers seeking to reduce database load and accelerate response times. In this article, we'll explore essential Redis caching strategies, walk through practical examples, and share best practices for optimal results.

- Choosing the Right Caching Strategy

- Advanced Strategies: LFU, LRU, and Beyond

- Distributed and Scalable Caching

- Conclusion

- Ready to Supercharge Your App?

Choosing the Right Caching Strategy

Selecting an appropriate caching strategy depends on your application's read/write patterns, data consistency requirements, and scalability goals. Here are three foundational approaches:

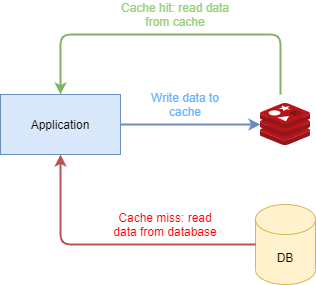

1. Cache-Aside (Lazy Loading)

Cache-Aside, or Lazy Loading, is the most common pattern: the application checks the cache first, then loads data from the database if missing and stores the result back into the cache.

    import json

    def get_user(user_id):
        # Try the cache first
        cached_user = redis_client.get(f"user:{user_id}")
        if cached_user is not None:
            return json.loads(cached_user)

        # Cache miss: load from the database and populate the cache
        user = db.query_user(user_id)
        redis_client.setex(f"user:{user_id}", 3600, json.dumps(user))  # cache for 1 hour
        return user

Pros:

- Simple to implement

- Ensures cache only stores actively accessed data

Cons:

- Can suffer from cache misses on cold start or infrequently accessed data
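To try the read path above without a running Redis server, the pattern can be factored into a small helper. This is a minimal sketch rather than the article's implementation: `cache_aside`, `FakeCache`, and the `loader` callback are hypothetical names, and `FakeCache` only mimics the `get` and `setex` calls used earlier (it ignores expiry).

```python
import json
from typing import Any, Callable


class FakeCache:
    """In-memory stand-in for a Redis client (hypothetical; TTLs are ignored)."""

    def __init__(self) -> None:
        self._data: dict = {}

    def get(self, key: str):
        return self._data.get(key)

    def setex(self, key: str, ttl_seconds: int, value: str) -> None:
        self._data[key] = value


def cache_aside(cache, key: str, ttl_seconds: int, loader: Callable[[], Any]) -> Any:
    """Return the cached value for key, calling loader() only on a miss."""
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)
    value = loader()
    cache.setex(key, ttl_seconds, json.dumps(value))
    return value


calls = []


def load_user_42():
    calls.append("db")  # record each simulated database hit
    return {"id": 42, "name": "Ada"}


cache = FakeCache()
first = cache_aside(cache, "user:42", 3600, load_user_42)   # miss: loader runs
second = cache_aside(cache, "user:42", 3600, load_user_42)  # hit: loader skipped
```

After both calls, `calls` holds a single entry, which shows that the second read was served from the cache.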

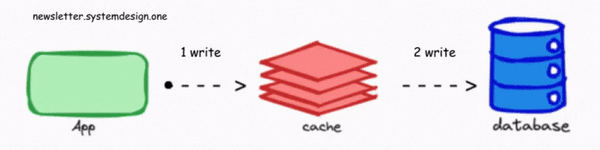

2. Write-Through Caching

Write-Through keeps the cache in step with the database: every write goes to both the database and Redis as part of the same operation, so subsequent reads from the cache see the latest data.

    def create_user(user_id, user_data):
        # Write to the database first, then mirror the same data into the cache
        db.insert_user(user_id, user_data)
        redis_client.set(f"user:{user_id}", json.dumps(user_data))

Pros:

- Cached entries stay fresh, provided every write goes through this path

- Simple reads from cache

Cons:

- Write latency increases (affects write-heavy workloads)

- Cache and database can diverge if one of the two writes fails
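One way to contain the partial-failure risk listed above is to treat the database as the source of truth and make the cache update best-effort. The sketch below assumes hypothetical `db` and `cache` objects exposing the same `insert_user`, `set`, and `delete` methods used earlier; catching a broad `Exception` is a simplification for illustration.

```python
import json
import logging

logger = logging.getLogger(__name__)


def create_user_write_through(db, cache, user_id, user_data) -> bool:
    """Write to the database, then mirror the data into the cache.

    Returns True if both writes succeeded, False if only the database
    write did. A database failure propagates and nothing is cached.
    """
    key = f"user:{user_id}"
    db.insert_user(user_id, user_data)  # source of truth: let errors propagate
    try:
        cache.set(key, json.dumps(user_data))
        return True
    except Exception:  # simplification; a real client raises narrower errors
        logger.warning("cache write failed for %s", key)
    try:
        # Best effort: drop any older entry so the next read falls back to the DB
        cache.delete(key)
    except Exception:
        logger.warning("cache delete failed for %s", key)
    return False
```

If the cache is completely unreachable, the delete fails too, so pairing this with a TTL on cached entries limits how long an outdated value can survive.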

3. Cache Invalidation Strategies

Maintaining cache consistency with the database is vital. Common invalidation strategies include:

- Time-based Expiry (TTL): Assigning a time-to-live to cache entries.

- Explicit Invalidation: Removing keys manually after a database change.

    def update_user(user_id, new_data):
        db.update_user(user_id, new_data)
        redis_client.delete(f"user:{user_id}")  # Invalidate cache on update

Tip: Experiment with TTL values to balance between data freshness and cache hit rate.
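To judge whether a TTL change helped, it is useful to track the hit rate. Redis reports `keyspace_hits` and `keyspace_misses` in the stats section of `INFO`; the helper name `cache_hit_rate` and the sample numbers below are illustrative assumptions.

```python
from typing import Mapping, Optional


def cache_hit_rate(stats: Mapping[str, int]) -> Optional[float]:
    """Compute hits / (hits + misses) from an INFO stats mapping.

    Returns None when no lookups have been recorded yet.
    """
    hits = int(stats.get("keyspace_hits", 0))
    misses = int(stats.get("keyspace_misses", 0))
    total = hits + misses
    if total == 0:
        return None
    return hits / total


# With redis-py, the mapping would typically come from redis_client.info("stats").
sample_stats = {"keyspace_hits": 900, "keyspace_misses": 100}  # made-up numbers
rate = cache_hit_rate(sample_stats)  # 0.9
```

These counters are cumulative, so comparing snapshots taken before and after a TTL change gives a fairer picture than a single reading.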

Advanced Strategies: LFU, LRU, and Beyond

Redis supports built-in eviction policies like Least Recently Used (LRU) and Least Frequently Used (LFU) to manage memory:

    # In redis.conf (or at runtime with CONFIG SET via redis-cli)
    maxmemory 256mb
    maxmemory-policy allkeys-lru

Use these when your working set might exceed available memory, letting Redis automatically evict less relevant data.
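As a rough illustration of what an LRU policy does, here is a toy cache that evicts the least recently used entry once it is full. It is a teaching sketch only: Redis limits memory in bytes rather than entry counts and uses an approximated LRU based on sampling, and `TinyLRUCache` is a hypothetical name.

```python
from collections import OrderedDict


class TinyLRUCache:
    """Toy LRU cache bounded by number of entries (not bytes)."""

    def __init__(self, max_entries: int) -> None:
        self.max_entries = max_entries
        self._items: OrderedDict = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def set(self, key, value) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        while len(self._items) > self.max_entries:
            self._items.popitem(last=False)  # evict least recently used

    def keys(self):
        return list(self._items)


lru = TinyLRUCache(max_entries=2)
lru.set("a", 1)
lru.set("b", 2)
lru.get("a")      # touch "a", so "b" becomes the eviction candidate
lru.set("c", 3)   # cache is full: "b" is evicted
remaining = lru.keys()  # ["a", "c"]
```

An LFU policy would instead track how often each key is read and evict the least frequently used one.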

Key Considerations:

- Tune maxmemory and maxmemory-policy for your workload

- Monitor eviction metrics to prevent unexpected data loss
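Eviction activity can be tracked by comparing two `INFO` snapshots. The sketch below relies on the `evicted_keys`, `used_memory`, and `maxmemory` fields that Redis reports; the function name `eviction_report` and the sample values are illustrative assumptions.

```python
from typing import Mapping, Optional


def eviction_report(previous: Mapping[str, int], current: Mapping[str, int]) -> dict:
    """Summarise evictions between two INFO snapshots."""
    prev_evicted = int(previous.get("evicted_keys", 0))
    cur_evicted = int(current.get("evicted_keys", 0))
    # The counter restarts from zero after a server restart or CONFIG RESETSTAT
    evicted = cur_evicted - prev_evicted if cur_evicted >= prev_evicted else cur_evicted

    max_memory = int(current.get("maxmemory", 0))
    used_ratio: Optional[float] = None
    if max_memory > 0:  # 0 means no memory limit is configured
        used_ratio = int(current.get("used_memory", 0)) / max_memory

    return {"evicted_since_last": evicted, "memory_used_ratio": used_ratio}


# With redis-py, each snapshot would typically come from redis_client.info().
before = {"evicted_keys": 10, "used_memory": 100, "maxmemory": 400}  # made-up values
after = {"evicted_keys": 25, "used_memory": 300, "maxmemory": 400}
report = eviction_report(before, after)
```

A steadily growing eviction count alongside a memory ratio near 1.0 suggests the working set no longer fits and the limit or policy needs another look.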

Distributed and Scalable Caching

As your system grows, distributing your cache helps prevent bottlenecks:

- Sharding: Partition keys across multiple Redis instances (“Redis Cluster”).

- Replication: Use Redis replicas to increase availability and read throughput.
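To make the sharding idea concrete: Redis Cluster assigns each key to one of 16384 hash slots using CRC16 of the key modulo 16384, and keys that share a `{hash tag}` land in the same slot. The following is a sketch of that mapping for illustration; `key_hash_slot` is a hypothetical helper, and in practice a cluster-aware client computes slots for you.

```python
import binascii

HASH_SLOTS = 16384  # number of hash slots in Redis Cluster


def key_hash_slot(key: str) -> int:
    """Map a key to a hash slot, honouring {hash tags}."""
    data = key.encode("utf-8")
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        # Only a non-empty tag between the first "{" and the next "}" counts
        if end != -1 and end != start + 1:
            data = data[start + 1:end]
    # crc_hqx with an initial value of 0 is the CRC16 (XMODEM) variant
    return binascii.crc_hqx(data, 0) % HASH_SLOTS


slot_a = key_hash_slot("{user:42}:profile")
slot_b = key_hash_slot("{user:42}:settings")
same_slot = slot_a == slot_b  # both keys hash only "user:42"
```

Keeping related keys in one slot this way matters because multi-key operations in a cluster generally require all keys to live in the same slot.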

    # Example Redis Cluster configuration (redis.conf)
    cluster-enabled yes
    cluster-config-file nodes.conf
    cluster-node-timeout 5000

Best Practices:

- Deploy Redis Sentinel for high availability in non-clustered setups (Redis Cluster handles failover itself)

- Monitor latency and check how the cache behaves during network partitions

Conclusion

Redis offers a rich set of caching strategies tailored to a variety of application needs. By understanding patterns like Cache-Aside, Write-Through, and appropriate invalidation techniques, you can drastically boost performance and scalability. Leverage advanced features and tune configurations for robust, production-ready caching solutions.

Ready to Supercharge Your App?

Experiment with these Redis caching strategies in your next project! Dive deeper into the Redis documentation, explore clustering and Sentinel for scale, and watch your application’s responsiveness soar. Happy caching!